ExamSoft: New Evidence from NCBE

Almost a year has passed since the ill-fated July 2014 bar exam. As we approach that anniversary, the National Conference of Bar Examiners (NCBE) has offered a welcome update.

Mark Albanese, the organization’s Director of Testing and Research, recently acknowledged that: “The software used by many jurisdictions to allow their examinees to complete the written portion of the bar examination by computer experienced a glitch that could have stressed and panicked some examinees on the night before the MBE was administered.” This “glitch,” Albanese concedes, “cannot be ruled out as a contributing factor” to the decline in MBE scores and pass rates.

More important, Albanese offers compelling new evidence that ExamSoft played a major role in depressing July 2014 exam scores. He resists that conclusion, but I think the evidence speaks for itself. Let’s take a look at the new evidence, along with why this still matters.

LSAT Scores and MBE Scores

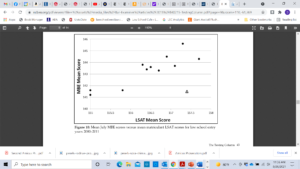

Albanese obtained the national mean LSAT score for law students who entered law school each year from 2000 through 2011. He then plotted those means against the average MBE scores earned by the same students three years later. The graph (Figure 10 on p. 43 of his article) looks like this:

As the black dots show, there is a strong linear relationship between scores on the LSAT and those for the MBE. Entering law school classes with high LSAT scores produce high MBE scores after graduation. For the classes that began law school from 2000 through 2010, the correlation is 0.89–a very high value.

Now look at the triangle toward the lower right-hand side of the graph. That symbol represents the relationship between mean LSAT score and mean MBE score for the class that entered law school in fall 2011 and took the bar exam in July 2014. As Albanese admits, this point lies well off the line: “it shows a mean MBE score that is much lower than that of other points with similar mean LSAT scores.”

Based on the historical relationship between LSAT and MBE scores, Albanese calculates that the Class of 2014 should have achieved a mean MBE score of 144.0. Instead, the mean was just 141.4, producing elevated bar failure rates across the country. As Albanese acknowledges, there was a clear “disruption in the relationship between the matriculant LSAT scores and MBE scores with the July 2014 examination.”
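To make the logic of that calculation concrete, here is a minimal sketch of the general approach: fit a line to the earlier cohorts, then ask how far a new cohort falls from it. I am assuming a simple least-squares fit; Albanese's article may use a different specification. I also don't have his underlying data, so the cohort values below are hypothetical placeholders and the function names are my own. Only the 144.0 and 141.4 figures come from his article.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def shortfall(xs, ys, new_x, new_y):
    """Predict y for a held-out cohort and return (prediction, actual - prediction)."""
    slope, intercept = fit_line(xs, ys)
    predicted = intercept + slope * new_x
    return predicted, new_y - predicted


# HYPOTHETICAL placeholder cohorts (mean LSAT, mean MBE); NOT Albanese's data.
lsat = [150.0, 151.0, 152.0, 153.0]
mbe = [140.0, 141.0, 142.0, 143.0]

# A hypothetical held-out cohort that scores below the fitted line.
predicted, gap = shortfall(lsat, mbe, new_x=152.5, new_y=141.0)

# The gap Albanese reports for July 2014: actual mean minus predicted mean.
reported_gap = round(141.4 - 144.0, 1)
```

A negative gap means the cohort scored below what its LSAT mean predicted. With the figures from Albanese's article, that gap is 2.6 scaled points.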

Professors Jerry Organ and Derek Muller made similar points last fall, but they were handicapped by their lack of access to LSAT means. The ABA releases only median scores, and those numbers are harder to compile into the type of persuasive graph that Albanese produced. Organ and Muller made an excellent case with their data–one that NCBE should have heeded–but they couldn’t be as precise as Albanese.

But now we have NCBE’s Director of Testing and Research admitting that “something happened” with the Class of 2014 “that disrupted the previous relationship between MBE scores and LSAT scores.” What could it have been?

Apprehending a Suspect

Albanese suggests a single culprit for the significant disruption shown in his graph: He states that the Law School Admission Council (LSAC) changed the manner in which it reported scores for students who take the LSAT more than once. Starting with the class that entered in fall 2011, Albanese writes, LSAC used the high score for each of those test takers; before then, it used the average scores.

At first blush, this seems like a possible explanation. On average, students who retake the LSAT improve their scores. Counting only high scores for these test takers, therefore, would increase the mean score for the entering class. National averages calculated using high scores for repeaters aren’t directly comparable to those computed with average scores.

But there is a problem with Albanese’s rationale: He is wrong about when LSAC switched its method for calculating national means. That occurred for the class that matriculated in fall 2010, not the one that entered in fall 2011. LSAC’s National Decision Profiles, which report these national means, state that quite clearly.

Albanese’s suspect, in other words, has an alibi. The change in LSAT reporting methods occurred a year earlier; it doesn’t explain the aberrational results on the July 2014 MBE. If we accept LSAT scores as a measure of ability, as NCBE has urged throughout this discussion, then the Class of 2014 should have received higher scores on the MBE. Why was their mean score so much lower than their LSAT test scores predicted?

NCBE has vigorously asserted that the test was not to blame; they prepared, vetted, and scored the July 2014 MBE using the same professional methods employed in the past. I believe them. Neither the test content nor the scoring algorithms are at fault. But we can’t ignore the evidence of Albanese’s graph: something untoward happened to the Class of 2014’s MBE scores.

The Villain

The villain almost certainly is the suspect who appeared at the very beginning of the story: ExamSoft. Anyone who has sat through the bar exam, who has talked to test-takers during those days, or who has watched students struggle to upload a single law school exam knows this.

I still remember the stress of the bar exam, although 35 years have passed. I’m pretty good at legal writing and analysis, but the exam wore me out. Few other experiences have taxed me as much mentally and physically as the bar exam.

For a majority of July 2014 test-takers, the ExamSoft “glitch” imposed hours of stress and sleeplessness in the middle of an already exhausting process. The disruption, moreover, occurred during the one period when examinees could recoup their energy and review material for the next day’s exam. It’s hard for me to imagine that ExamSoft’s failure didn’t reduce test-taker performance.

The numbers back up that claim. As I showed in a previous post, bar passage rates dropped significantly more in states affected directly by the software crash than in other states. The difference was large enough that there is less than a 0.001 probability that it occurred by chance. If we combine that fact with Albanese’s graph, what more evidence do we need?
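For readers who want to see how a comparison like this can be tested, here is a minimal sketch using a one-sided permutation test. This is a stand-in technique chosen for simplicity, not necessarily the method I used in the earlier post, and the state-level changes below are hypothetical placeholders rather than real pass-rate data. The function name is my own.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_iter=10_000, seed=0):
    """One-sided permutation test: is mean(group_a) lower than mean(group_b)?

    Returns the observed difference in means and the estimated probability
    of a difference at least that negative under random relabeling.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if diff <= observed:
            hits += 1
    # Add one to numerator and denominator so the estimate is never zero.
    return observed, (hits + 1) / (n_iter + 1)


# HYPOTHETICAL year-over-year changes in pass rate (percentage points).
affected = [-6.0, -5.5, -7.0, -4.5, -6.5, -5.0]    # states hit by the crash
unaffected = [-1.0, -2.0, -0.5, -1.5, -2.5, -1.0]  # other states

observed, p_value = permutation_p_value(affected, unaffected)
```

A small p-value says that a gap this large would rarely arise if the "affected" label were assigned to states at random.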

Aiding and Abetting

ExamSoft was the original culprit, but NCBE aided and abetted the harm. The testing literature is clear that exams can be equated only if both the content and the test conditions are comparable. The testing conditions on July 29-30, 2014, were not the same as in previous years. The test-takers were stressed, overtired, and under-prepared because of ExamSoft’s disruption of the testing procedure.

NCBE was not responsible for the disruption, but it should have refrained from equating results produced under the 2014 conditions with those from previous years. Instead, it should have flagged this issue for state bar examiners and consulted with them about how to use scores that significantly understated the ability of test takers. The information was especially important for states that had not used ExamSoft, but whose examinees suffered repercussions through NCBE’s scaling process.

Given the strong relationship between LSAT scores and MBE performance, NCBE might even have used that correlation to generate a second set of scaled scores correcting for the ExamSoft disruption. States could have chosen which set of scores to use–or could have decided to make a one-time adjustment in the cut score. However states decided to respond, they would have understood the likely effect of the ExamSoft crisis on their examinees.

Instead, we have endured a year of obfuscation–and of blaming the Class of 2014 for being “less able” than previous classes. Albanese’s graph shows conclusively that diminished ability doesn’t explain the abnormal dip in July 2014 MBE scores. Our best predictor of that ability, scores earned on the LSAT, refutes that claim.

Lessons for the Future

It’s time to put the ExamSoft debacle to rest–although I hope we can do so with an even more candid acknowledgement from NCBE that the software crash was the primary culprit in this story. The test-takers deserve that affirmation.

At the same time, we need to reflect on what we can learn from this experience. In particular, why didn’t NCBE take the ExamSoft crash more seriously? Why didn’t NCBE and state bar examiners proactively address the impact of a serious flaw in exam administration? The equating and scaling process is designed to assure that exam takers do not suffer by taking one exam administration rather than another. The July 2014 examinees clearly did suffer by taking the exam during the ExamSoft disruption. Why didn’t NCBE and the bar examiners work to address that imbalance, rather than extend it?

I see three reasons. First, NCBE staff seem removed from the experience of bar exam takers. The psychometricians design and assess tests, but they are not lawyers. The president is a lawyer, but she was admitted through Wisconsin’s diploma privilege. NCBE staff may have tested bar questions and formats, but they lack firsthand knowledge of the test-taking experience. This may have affected their ability to grasp the impact of ExamSoft’s disruption.

Second, NCBE and law schools have competing interests. Law schools have economic and reputational interests in seeing their graduates pass the bar; NCBE has economic and reputational interests in disclaiming any disruption in the testing process. The bar examiners who work with NCBE have their own economic and reputational interests: reducing competition from new lawyers. Self-interest is nothing to be ashamed of in a market economy; nor is self-interest incompatible with working for the public good.

The problem with the bar exam, however, is that these parties (NCBE and bar examiners on one side, law schools on the other) tend to talk past one another. Rather than gain insights from each other, the parties often communicate only after decisions are made. Each seems to believe that it protects the public interest, while the other is driven purely by self-interest.

This stand-off hurts law school graduates, who get lost in the middle. NCBE and law schools need to start listening to one another; both sides have valid points to make. The ExamSoft crisis should have prompted immediate conversations between the groups. Law schools knew how the crash had affected their examinees; the cries of distress were loud and clear. NCBE knew, as Albanese’s graph shows, that MBE scores were far below outcomes predicted by the class’s LSAT scores. Discussion might have generated wisdom.

Finally, the ExamSoft debacle demonstrates that we need better coordination–and accountability–in the administration and scoring of bar exams. When law schools questioned the July 2014 results, NCBE’s president disclaimed any responsibility for exam administration. That’s technically true, but exam administration affects equating and scaling. Bar examiners, meanwhile, accepted NCBE’s results without question; they assumed that NCBE had taken all proper factors (including any effect from a flawed administration) into account.

We can’t rewind administration of the July 2014 bar exam; nor can we redo the scoring. But we can create a better system for exam administration going forward, one that includes more input from law schools (who have valid perspectives that NCBE and state bar examiners lack) as well as more coordination between NCBE and bar examiners on administration issues.

Jobs, Bar Exam, ExamSoft, NCBE

About Law School Cafe

Cafe Manager & Co-Moderator

Deborah J. Merritt

Cafe Designer & Co-Moderator

Kyle McEntee

Law School Cafe is a resource for anyone interested in changes in legal education and the legal profession.
